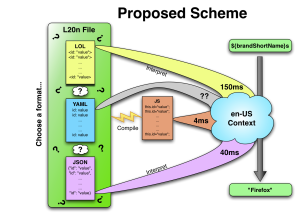

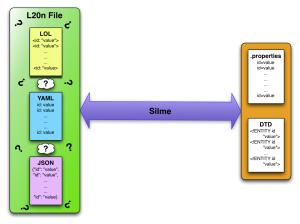

L20n (for localization, 2.0) aims to empower localizers to describe complexities and subtleties of their language: gendered nouns, singular/plural forms, and just about any other quirk that might exist in the grammar. Like DTD and .properties formats, which we currently use to encode localizable strings, l20n objects associate entity IDs with string values. Localizers translate these values into the target language. L20n has all the power of the current framework, plus a lot more, and it’s just as simple to use (provided we choose the right format!). You can find some examples of l20n in action here.

In the past weeks, we’ve experimented with JSON (JavaScript Object Notation) as a file format to represent localizable objects in hopes of achieving better performance by leveraging the new built-in JSON parser in Firefox. The performance gains were substantial, but still not enough to compete with the current DTD/properties framework in terms of speed.

We’ve since adopted a new scheme to compile our l20n source files into native JavaScript (credit to Staś Małolepszy for suggesting this). This compilation now guarantees good performance independent of our choice of source file format. I will discuss the specifics of compilation in an upcoming post; this post will focus on the relative merits of the various formats under consideration.

Meet the contenders

LOL files

Before experimenting with JSON, we developed a novel format for l20n, playfully titled “localizable object lists” (.lol files). A lol file looks like a hybrid of DTD and .properties formats, with entities delimited by angle brackets and colons separating keys from values. Here’s a simple example, constructed from brand.dtd:

<brandShortName: "Minefield">

<brandFullName: "Minefield">

<vendorShortName: "Mozilla">

<logoCopyright: " ">

In this simple case, the lol file looks a lot like the original brand.dtd, which looks like this:

<!ENTITY brandShortName "Minefield">

<!ENTITY brandFullName "Minefield">

<!ENTITY vendorShortName "Mozilla">

<!ENTITY logoCopyright " ">
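For simple entities like these, the mapping between the two formats is mechanical. Here is a minimal Python sketch of that mapping. It is an illustration only, not part of the l20n tooling: `ENTITY_RE` and `dtd_to_lol` are hypothetical names, and the pattern assumes double-quoted values with no parameter entities or comments.

```python
import re

# Matches simple declarations of the form <!ENTITY name "value">.
# Assumption: values are double-quoted and contain no double quotes.
ENTITY_RE = re.compile(r'<!ENTITY\s+([\w.\-]+)\s+"([^"]*)">')


def dtd_to_lol(dtd_text: str) -> str:
    """Rewrite each simple DTD entity as a lol-style <name: "value"> entry."""
    return "\n".join(
        f'<{name}: "{value}">' for name, value in ENTITY_RE.findall(dtd_text)
    )


sample = '''<!ENTITY brandShortName "Minefield">
<!ENTITY logoCopyright " ">'''

print(dtd_to_lol(sample))
# <brandShortName: "Minefield">
# <logoCopyright: " ">
```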

We lost the !ENTITY declaration and added a colon, but otherwise the lol format should look familiar. What if we want to do something more complex, like define an entity that involves a gendered noun? Here’s a German example encoded in a lol file:

/* This entity references a gendered noun */

<complex[appName.gender]: {

male: "Ein hübscher ${appName}s.",

female: "Ein hübsches ${appName}s."}>

/* This is a gendered noun */

<appName: "Jägermeister"

gender: "male">

In the above example, we indicated the “complex” entity depends on the “gender” property of the “appName” entity. The ${…}s expander within the string is a placeholder that will be replaced with the value of “appName” (Jägermeister). Note that we can insert comments in the familiar /*…*/ style. If you’re curious to see more use cases for l20n and the lol format, be sure to check out the link above to Axel’s examples.
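To make the lookup concrete, here is a rough Python sketch of how such an entity could be resolved: follow the index to pick a variant, then expand the placeholder. It is not the actual l20n implementation. The nested dictionary layout (including the `indices` key) and the names `lookup`, `resolve`, and `PLACEHOLDER_RE` are assumptions made for illustration.

```python
import re

# Hypothetical in-memory form of the two entities above. The layout
# ("indices", "value", extra properties) is an assumption, not l20n's own.
entities = {
    "complex": {
        "indices": ["appName.gender"],
        "value": {
            "male": "Ein hübscher ${appName}s.",
            "female": "Ein hübsches ${appName}s.",
        },
    },
    "appName": {"value": "Jägermeister", "gender": "male"},
}

# Matches the ${name}s expander and captures the entity name.
PLACEHOLDER_RE = re.compile(r"\$\{(\w+)\}s")


def lookup(path: str) -> str:
    """Resolve 'entity.property', e.g. 'appName.gender' -> 'male'."""
    entity_id, prop = path.split(".")
    return entities[entity_id][prop]


def resolve(entity_id: str) -> str:
    """Return the display string for an entity."""
    entity = entities[entity_id]
    value = entity["value"]
    # Step one level into the value for every index the entity declares.
    for index in entity.get("indices", []):
        value = value[lookup(index)]
    # Replace each ${name}s expander with that entity's resolved string.
    return PLACEHOLDER_RE.sub(lambda m: resolve(m.group(1)), value)


print(resolve("complex"))  # Ein hübscher Jägermeister.
```

Changing the `gender` property of `appName` to `"female"` would select the other variant without touching the `complex` entity itself.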

JSON

JSON is a well-known data exchange format. It’s simple to understand, and with implementations available in many different languages, simple to use. As mentioned above, our initial attempt to encode localizable objects in JSON was motivated by performance concerns. Even without a speed advantage, JSON still has some attractions, namely its existing implementations. Our JSON-based l20n infrastructure leverages Gecko’s built-in parser to do a lot of heavy lifting, meaning we have less code to maintain on our part. Plus, arrays and hashes, the fundamental datatypes available in JSON, are a natural fit for localizable objects. Still, JSON has some serious shortcomings, which we will see shortly.

JSON is great for describing key-value pairs. Here’s the same brand.dtd example, now expressed in JSON:

{"brandShortName" : {"value" : "Minefield"},

"brandFullName" : {"value" : "Minefield"},

"vendorShortName" : {"value" : "Mozilla"},

"logoCopyright" : {"value" : " "}}

Our localizable objects in JSON feature a “value” attribute denoting the string to be displayed. This, along with the required quotes around the keys, makes our JSON example slightly more verbose. Now here’s the same gendered-noun example from above, this time in JSON:

{ "complex" :

{"indices" : ["appName.gender"],

"value" : { "male" : "Ein hübscher ${appName}s.",

"female" : "Ein hübsches ${appName}s."}},

"appName" : {"value" : "Jägermeister",

"gender" : "male"}}

In JSON, we need to reserve some keywords for attributes, like “indices” here, to implement certain l20n features. Still, JSON works pretty well to express the structure of the object. One area where JSON doesn’t work so well is comments. In JSON, our top-level object is a hash that associates entity IDs with their definitions. There are a few apparent ways to integrate comments into this object:

- Assign each comment to the same identifier, e.g. “comment”.

- Assign each comment to a unique identifier, e.g. “comment0”, “comment1”, etc.

- Don’t allow top-level comments: each comment has to be an attribute of an entity.

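Option 1 makes sense for humans writing JSON, and it’s syntactically valid, but it’s a little strange: an object with repeated keys is unusual, and many parsers keep only the last value for a duplicated key.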

Option 2 is a little painful when writing the file, especially when it comes to inserting new comments. This scheme would make it possible to reference specific comments, which might be useful.

Option 3 is somewhat of a straw-man but still deserves some consideration. Most comments in a localizable file give instructions for how to translate a specific entity, and now that relationship would be explicitly enforced. This form of comment is likely the best choice in most situations, but it probably is too restrictive to make it the only choice.
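To see what Option 1 means in practice, here is a small Python sketch using the standard `json` module as a stand-in parser. The comment texts are made up for illustration, and nothing here describes how Gecko's parser or the l20n code treats duplicate keys.

```python
import json

# Option 1: every comment uses the same key. The comment texts are
# invented for this example.
text = '''{
  "comment": "Brand names should not be translated",
  "brandShortName": {"value": "Minefield"},
  "comment": "Leave blank if there is no copyright notice",
  "logoCopyright": {"value": " "}
}'''

# Parsed into a plain dict, only the last "comment" survives.
as_dict = json.loads(text)
print(as_dict["comment"])
# Leave blank if there is no copyright notice

# object_pairs_hook receives every key/value pair in file order, so the
# repeated "comment" keys are all kept, each just before its entity.
as_pairs = json.loads(text, object_pairs_hook=list)
comments = [value for key, value in as_pairs if key == "comment"]
print(len(comments))  # 2
```

A tool that wants to preserve Option 1 comments would therefore need to read the file as an ordered list of pairs rather than as an ordinary hash.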

Another shortcoming in JSON is that it doesn’t support multiline strings. This is a serious problem when it comes to presenting long strings to localizers, since line breaks aren’t just for readability; they also give important cues for localization about logical separation between thoughts. As it turns out, the native JSON parser built into Gecko is perfectly content to accept multiline strings, but most other parsers will report an error.
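The difference between strict and lenient parsers can be demonstrated with Python's standard `json` module, used here only as an example of a strict parser. Its `strict=False` option plays the role of a lenient one; this says nothing about how Gecko's parser is implemented. The helper `parses` and the sample strings are hypothetical.

```python
import json

# The "\n" in this Python literal is a real line break inside the JSON string.
multiline = '{"intro": {"value": "First thought.\nSecond thought."}}'


def parses(text: str, **options) -> bool:
    """Report whether json.loads accepts the text with the given options."""
    try:
        json.loads(text, **options)
        return True
    except json.JSONDecodeError:
        return False


print(parses(multiline))                # False: raw line break is rejected
print(parses(multiline, strict=False))  # True: lenient mode accepts it

# The portable form escapes the line break, at the cost of readability.
escaped = multiline.replace("\n", "\\n")
print(parses(escaped))                  # True
```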

YAML: A better JSON?

YAML is a data serialization language that is a superset of JSON. It supports comments, multiline strings, and user-defined data types. On the downside, it’s not nearly as well-known as JSON, it’s considerably more complex, and it’s not already built in to the Mozilla platform.

Here’s our first example from above, now in YAML:

brandShortName: Minefield

brandFullName: Minefield

vendorShortName: Mozilla

logoCopyright: " "

And the second example:

complex:

  indices: [appName.gender]

  value:

    male: Ein hübscher ${appName}s.

    female: Ein hübsches ${appName}s.

appName: {value: Jägermeister, gender: male}

YAML has the same logical structure as JSON with a much cleaner look, since indentation can be used instead of curly braces to denote objects, and it doesn’t require strings to be delimited with quotes. That’s another attractive feature, since it reduces the chance of errors from improperly escaped quotes within strings and from missing closing quotes, both of which cause a lot of frustration. The less rosy side of the picture is that we don’t have a YAML parser that we can simply drop into place like we did with JSON, so it would require a lot of work on our part to get it up and running. YAML does have a fair number of implementations floating around, but development seems to have stalled on many of these. For example, this JavaScript implementation hasn’t seen any updates in nearly 5 years.

Summary

So far we’ve examined three choices: LOL, JSON, and YAML. The first was designed specifically for l20n, so naturally it encodes the complex features of l20n most gracefully. The remaining two are established formats with implementations available in many different programming languages (JSON to a far greater extent than YAML). The lack of comments and multiline strings is probably enough to eliminate JSON from the discussion, since these deficits outweigh any advantage of interoperability, leaving us with LOL and YAML. If you’d like to make a case for one of these, or any other format dear to your heart, don’t hesitate to leave a comment! We’d love to get your input.

Posted by jeremyhiatt
